If you have a website, then you ought to know about Google Search Console. Formerly known as Google Webmaster Tools, this free software is like a dashboard of instruments that let you manage your site. Seriously, unless you prefer running your online business blindfolded, getting this set up should be any webmaster’s SEO priority. In our previous article, you learned step-by-step how to set up a Google Search Console account.

This next article is more advanced. We will cover how to tie Analytics into your Search Console and how to read the different areas of measurement, so that you have complete control over your digital “Destiny.”

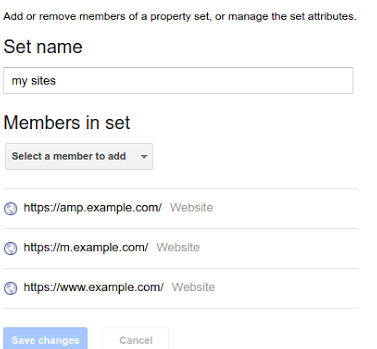

Google has launched ‘Property Sets’ within their Search Console allowing webmasters to combine apps and sites within a single group in order to monitor overall clicks and impressions within a single report. It will be rolling out to all users over the next couple of days. This is a great feature for those that have many subdomains as well.

Google provides these instructions to get started:

1. Create a property set

2. Add the properties you’re interested in

3. The data will start being collected within a few days

4. Profit from the new insights in Search Analytics!

Google adds:

Property Sets will treat all URIs from the properties included as a single presence in the Search Analytics feature. This means that Search Analytics metrics aggregated by host will be aggregated across all properties included in the set. For example, at a glance you’ll get the clicks and impressions of any of the sites in the set for all queries.

This feature will work for any kind of property in Search Console. Use it to gain an overview of your international websites, of mixed HTTP / HTTPS sites, of different departments or brands that run separate websites, or monitor the Search Analytics of all your apps together: all of that’s possible with this feature.
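
To make the idea of a “single presence” more concrete, here is a minimal Python sketch of what summing metrics across a set looks like. The property URLs, the numbers, and the `aggregate_property_set` helper are all invented for illustration. Google’s real aggregation may differ in its details, so treat this as a picture of the concept rather than a description of how Search Console computes its report.

```python
from collections import defaultdict

# Hypothetical per-property Search Analytics rows: (query, clicks, impressions).
# The property URLs and numbers are invented for illustration only.
property_rows = {
    "http://example.com/": [("blue widgets", 10, 200), ("widget repair", 3, 50)],
    "https://example.com/": [("blue widgets", 25, 400)],
    "https://shop.example.com/": [("widget repair", 7, 90), ("buy widgets", 4, 60)],
}


def aggregate_property_set(rows_by_property):
    """Sum clicks and impressions per query across every property in the set."""
    totals = defaultdict(lambda: {"clicks": 0, "impressions": 0})
    for rows in rows_by_property.values():
        for query, clicks, impressions in rows:
            totals[query]["clicks"] += clicks
            totals[query]["impressions"] += impressions
    return dict(totals)


if __name__ == "__main__":
    # Print one combined line per query, as a single report would.
    for query, metrics in sorted(aggregate_property_set(property_rows).items()):
        print(query, metrics["clicks"], metrics["impressions"])
```

In this toy data, the HTTP and HTTPS versions of the same site each report “blue widgets” separately, and the set rolls them into one line.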

How to link Google Analytics with Google Search Console

Google Analytics and Google Search Console might seem like they offer the same information, but there are some key differences between these two Google products. GA is more about who is visiting your site: how many visitors you’re getting, how they’re getting to your site, how much time they’re spending on your site, and where your visitors are coming from (geographically speaking). Google Search Console, in contrast, is geared more toward internal information: who is linking to you, whether there is malware or other problems on your site, and which keyword queries your site is appearing for in search results. Analytics and Search Console also do not treat some information in exactly the same way, so even if you think you’re looking at the same report, you might not be getting exactly the same information in both places.

To get the most out of the information provided by Search Console and GA, you can link the two accounts together. Linking them integrates the data from both sources and gives you additional reports that are only available once the accounts are connected. So, let’s get started:

Has your site been added and verified in Search Console? If not, you’ll need to do that before you can continue.

From the Search Console dashboard, click on the site you’re trying to connect. In the upper right-hand corner, you’ll see a gear icon. Click on it, then choose “Google Analytics Property.”

This will bring you to a list of Google Analytics accounts associated with your Google account. All you have to do is choose the desired GA account and hit “Save.” Easy, right? That’s all it takes to start getting the most out of Search Console and Analytics.

Adding a sitemap

Sitemaps are files that give search engines and web crawlers important information about how your site is organized and the type of content available there. Sitemaps can include metadata, with details about your site such as information about images and video content, and how often your site is updated.
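
If you have never seen one, a sitemap is usually just a small XML file. The following Python sketch builds one with the standard library. The page URLs, dates, and the `build_sitemap` helper are hypothetical. The `loc`, `lastmod`, and `changefreq` tags and the namespace come from the public sitemaps.org protocol. Many sites have a CMS or plugin that generates this file for them, so this only shows what ends up inside it.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML as a string for (url, lastmod, changefreq) tuples."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod, changefreq in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc                # page address
        ET.SubElement(url, "lastmod").text = lastmod        # last modified date
        ET.SubElement(url, "changefreq").text = changefreq  # update-frequency hint
    return ET.tostring(urlset, encoding="unicode")


if __name__ == "__main__":
    # Hypothetical pages, used only to show the output format.
    print(build_sitemap([
        ("https://www.example.com/", "2016-05-01", "weekly"),
        ("https://www.example.com/blog/", "2016-05-20", "daily"),
    ]))
```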

By submitting your sitemap to Google Search Console, you’re making Google’s job easier by ensuring they have the information they need to crawl your site more efficiently. Submitting a sitemap isn’t mandatory, though, and your site won’t be penalized if you don’t submit one. But there’s certainly no harm in submitting one, especially if your site is very new and not many other sites are linking to it, if you have a very large website, or if your site has many pages that aren’t thoroughly linked together.

Before you can submit a sitemap to Search Console, your site needs to be added and verified in Search Console. If you haven’t already done so, go ahead and do that now.

From your Search Console dashboard, select the site you want to submit a sitemap for. On the left, you’ll see an option called “Crawl.” Under “Crawl,” there will be an option marked “Sitemaps.”

Click on “Sitemaps.” There will be a button marked “Add/Test Sitemap” in the upper right-hand corner.

This will bring up a box with a space to add text to it.

Type the path to your sitemap file, such as “system/feeds/sitemap,” in that box and hit “Submit sitemap.” Congratulations, you have now submitted a sitemap!

Checking a robots.txt file

Having a website doesn’t necessarily mean you want to have all of its pages or directories indexed by search engines. If there are certain things on your site you’d like to keep out of search engines, you can accomplish this by using a robots.txt file. A robots.txt file placed in the root of your site tells search engine robots (i.e., web crawlers) what you do and do not want indexed, using commands defined by the Robots Exclusion Standard.

It’s important to note that robots.txt files aren’t necessarily guaranteed to be 100% effective in keeping things away from web crawlers. The commands in robots.txt files are instructions, and although the crawlers used by credible search engines like Google will accept them, it’s entirely possible that a less reputable crawler will not. It’s also entirely possible for different web crawlers to interpret commands differently. Robots.txt files also will not stop other websites from linking to your content, even if you don’t want it indexed.

If you want to check your robots.txt file to see exactly what it is and isn’t allowing, log into Search Console and select the site whose robots.txt file you want to check. Haven’t already added or verified your site in Search Console? Do that first.

On the left-hand side of the screen, you’ll see the option “Crawl.” Click on it and choose “robots.txt Tester.” The robots.txt Tester tool lets you look at your robots.txt file, make changes to it, and see alerts about any errors it finds. You can also choose from a selection of Google’s user-agents (names for robots/crawlers), enter a URL, and run a test to see whether that URL is allowed or blocked for that crawler.
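
You can approximate the same kind of check on your own machine. The sketch below uses Python’s standard `urllib.robotparser` module. The robots.txt content, the paths, and the `is_allowed` helper are hypothetical. Python’s parser is not Google’s, so the two may disagree on edge cases, which is exactly the point made earlier about crawlers interpreting rules differently.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; the paths are invented for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Disallow: /tmp/

User-agent: Googlebot
Disallow: /drafts/
"""


def is_allowed(robots_txt, user_agent, url):
    """Return True if Python's parser says user_agent may fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for agent, url in [
        ("Googlebot", "https://www.example.com/drafts/post.html"),
        ("Googlebot", "https://www.example.com/private/report.html"),
        ("SomeOtherBot", "https://www.example.com/private/report.html"),
    ]:
        print(agent, url, is_allowed(ROBOTS_TXT, agent, url))
```

Note that in this parser a crawler with its own group (here, Googlebot) follows only that group, so the rules under `User-agent: *` do not apply to it.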

If you make any changes to your robots.txt file using Google’s robots.txt tester, the changes will not be automatically reflected in the robots.txt file hosted on your site. Luckily, it’s pretty easy to update it yourself. Once your robots.txt file is how you want it, hit the “Submit” button underneath the editing box in the lower right-hand corner. This will give you the option to download your updated robots.txt file. Simply upload that to your site in the same directory where your old one was (www.example.com/robots.txt). Obviously, the domain name will change, but your robots.txt file should always be named “robots.txt” and the file needs to be saved in the root of your domain, not www.example.com/somecategory/robots.txt.
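
If you are ever unsure where the file belongs, the rule is simple enough to express in a few lines. This small Python sketch takes any page address and works out where its robots.txt must live. The `robots_txt_url` helper name is our own.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url):
    """Return the root-level robots.txt URL for the site that serves page_url."""
    parts = urlsplit(page_url)
    # Keep the scheme and host, replace the path, and drop query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


if __name__ == "__main__":
    print(robots_txt_url("http://www.example.com/somecategory/page.html"))
```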

Back on the robots.txt testing tool, hit “Verify live version” to make sure the correct file is on your site. Everything correct? Good! Click “Submit live version” to let Google know you’ve updated your robots.txt file and they should crawl it. If not, re-upload the new robots.txt file to your site and try again.

Fetch as Google and submit to index

If you’ve made significant changes to a website, the fastest way to get the updates indexed by Google is to submit them manually. This will allow changes to things such as on-page content or title tags to appear in search results as soon as possible.

The first step is to sign into Google Search Console. Next, select the site you need to submit. If the website does not use the ‘www.’ prefix, then make sure you click on the entry without it (or vice versa).

On the left-hand side of the screen, you should see a “Crawl” option. Click on it, then choose “Fetch as Google.”

If you need to fetch the entire website (such as after a major site-wide update, or if the homepage has had a lot of remodeling done) then leave the center box blank. Otherwise, use it to enter the full address of the page you need indexed, such as http://example.com/category. Once you enter the page you need indexed, click the “Fetch and Render” button. Fetching might take a few minutes, depending on the number/size of pages being fetched.

After the fetch has finished, a “Submit to Index” button will appear in the results listing at the bottom (near the “Complete” status). Click it, and you will be given the option to either “Crawl Only This URL,” which is the option you want if you’re only fetching/submitting one specific page, or “Crawl This URL and its Direct Links,” if you need to index the entire site.

Choose the option you need, wait for the request to complete, and you’re done! Google has now sent its search bots to catalog the new content on your page, and the changes should appear in Google within the next few days.

Site errors in Google Search Console

Nobody wants to have something wrong on their website, but sometimes you might not realize there’s a problem unless someone tells you. Instead of waiting for someone to tell you about a problem, Google Search Console can notify you of any errors it finds on your site.

If you want to check a site for internal errors, select the site you’d like to check. On the left-hand side of the screen, click on “Crawl,” then select “Crawl Errors.”

You will then be taken directly to the Crawl Errors page, which displays any site or URL errors found by Google’s bots while crawling the site.

Any URL errors found will be displayed at the bottom. Click on any of the errors for a description of the error encountered and further details.
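
If you keep your own list of problem URLs, for example from your server logs, it can help to group them before working through them. The Python sketch below sorts (URL, HTTP status code) pairs into rough buckets. The URLs and the `bucket_for` and `group_errors` helpers are hypothetical, and the bucket names are our own shorthand, so they are not guaranteed to match the labels Search Console shows.

```python
from collections import defaultdict


def bucket_for(status):
    """Map an HTTP status code to a rough, self-chosen error bucket."""
    if status in (404, 410):
        return "not found"
    if status in (401, 403):
        return "access denied"
    if 500 <= status <= 599:
        return "server error"
    if 200 <= status <= 399:
        return "ok"
    return "other"


def group_errors(results):
    """Group (url, status) pairs by bucket, leaving out URLs that are fine."""
    grouped = defaultdict(list)
    for url, status in results:
        bucket = bucket_for(status)
        if bucket != "ok":
            grouped[bucket].append(url)
    return dict(grouped)


if __name__ == "__main__":
    # Hypothetical crawl results, invented for illustration.
    sample = [
        ("https://www.example.com/", 200),
        ("https://www.example.com/old-page", 404),
        ("https://www.example.com/admin", 403),
        ("https://www.example.com/search", 500),
    ]
    for bucket, urls in group_errors(sample).items():
        print(bucket, urls)
```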